One of the things that fascinates me about NASA’s early manned programs is the risks the organization took to achieve its goals. The Apollo Program is a great example: NASA had a goal, a time frame in which to achieve its goal, and a real need to succeed. The risks could be justified in the name of a successful end-of-decade lunar landing. But the organization also had the money needed to achieve such a technological feat – roughly 4 percent of the federal budget in the mid-1960s instead of the less than 1 percent it has now. (Pictured, engineers and astronauts begin troubleshooting in the minutes after an explosion rocked Apollo 13. 1970.)

Still, it wasn’t just having enough money to run the tests needed to get the results. NASA made bold, daring decisions in the 60s. Since the end of Apollo, however, NASA has become more conservative in its approach to both manned spaceflight and unmanned planetary exploration.

Spaceflight is inherently risky – there’s no way to switch out a warhead for a man on a ballistic missile and call it 100 percent safe. In its early years, NASA set standards to minimize risk. All spacecraft components were held to the rule of “three nines”. Ideally, each piece of hardware and software had to be 99.9 percent reliable. This translates roughly to a 1 in 1,000 chance of failure.

The rule of “three nines” was ideal but not always achievable. Instead, NASA strove for the most reliable system possible with the lowest failure rate. Still, even with high reliability and ample redundancies in place, NASA couldn’t prevail over all risks.
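To see why “three nines” per component still leaves plenty of room for failure, here is a rough sketch of the arithmetic. It assumes component failures are independent, which real hardware only approximates, and the component counts are made-up illustrative numbers rather than figures for any actual NASA spacecraft. The function names are my own.

```python
def series_reliability(component_reliability: float, n_components: int) -> float:
    """Chance that ALL of n independent components work (every one is required)."""
    return component_reliability ** n_components


def redundant_reliability(component_reliability: float, n_copies: int) -> float:
    """Chance that AT LEAST ONE of n independent redundant copies works."""
    return 1.0 - (1.0 - component_reliability) ** n_copies


if __name__ == "__main__":
    three_nines = 0.999  # 1 in 1,000 chance of failure per component

    # Hypothetical part counts, chosen only to show the trend.
    for n in (1, 100, 1000):
        print(f"{n} parts in series: {series_reliability(three_nines, n):.4f}")

    # A single backup copy of one critical part.
    print(f"one part plus a backup: {redundant_reliability(three_nines, 2):.6f}")
```

Under these assumptions, a chain of 1,000 three-nines parts that must all work succeeds only about 37 percent of the time, while a single backup lifts one part from 99.9 to 99.9999 percent. That gap is one way to read NASA’s reliance on redundancy.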

In January 1967, a flaw in the hatch design of the Apollo command module (CM) contributed to the death of the Apollo 1 crew. A fire on the launch pad during a routine test increased the pressure inside the crew cabin. From their supine position on their couches, the astronauts wrestled with the inward-opening hatch to no avail. They couldn’t pull against the increasing pressure from that position. Such a basic design flaw necessitated a major redesign. Adding the loss of a crew to the problem meant no crew would fly until NASA was convinced its new system was reliable. (Pictured, the view inside the command module of Apollo 15. The position of the astronauts relative to the hatch is clearly visible. 1971.)

But the overall program didn’t lag. Unmanned tests continued. Saturn V launches and shakedowns of unmanned spacecraft components in orbit dominated the Apollo launch schedule in the months that followed.

Apollo 7 was the first manned mission to fly after the Apollo 1 fire, and it flew in the newly redesigned spacecraft. Taking into account the total redesign of the Apollo CM, NASA resumed manned flight in a short time frame. Apollo 7 launched in October 1968, after a hiatus of about one year and nine months.

While correcting the cause of the Apollo 1 fire, NASA didn’t lose sight of the lunar goal. In the months prior to Apollo 7’s launch, the organization received intelligence that the Soviet Union had a new spacecraft capable of a lunar mission. The Zond spacecraft was big enough to carry three men to the moon and back. The Soviet space program had proved the spacecraft’s capability with the Zond 5 mission; it travelled to the moon, swung around its far side, and returned.

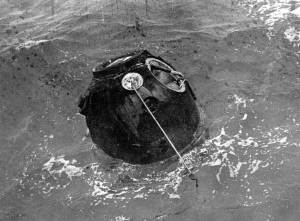

The Soviet agenda was clear. The next step for Zond was to repeat the same lunar mission with a crew. After that, a landing was imminent. It seemed like the Soviet Union was poised to regain its lead over America in space. (Right, Zond 5.)

The renewed Soviet threat with Zond increased the pressure on Apollo. The intelligence reached NASA midway through 1968. Unfortunately, the organization was not in a position to respond immediately to the new threat. The lunar module (LM) was behind schedule; it hadn’t yet been tested in flight by a crew. NASA had no way to bump up its attempt at a lunar landing.

Landing a man on the moon was impossible without a working LM, but getting a man to the moon would buy NASA some time.

And so the organization made a bold decision. If Apollo 7 was successful in proving the new Apollo spacecraft was truly flightworthy, Apollo 8 would go to the moon without an LM.

The easy option was a free-return trajectory mission – the crew could use the Moon’s gravity to slingshot them around the moon and send them right back from whence they came. It would be exactly what the unmanned Zond had done. But that wasn’t good enough for NASA and mission planners quickly upped the ante. If the crew was going to go a quarter of a million miles away, they were going to stay there for a while. Apollo 8 would enter into lunar orbit before returning home.

The mission was filled with unknowns that added to the risk. The Saturn V had never taken a crew into orbit. No crew had ever left the Earth’s orbit. No crew had entered into orbit around another body in the solar system. Perhaps most hazardous was that no crew had returned from orbit around another body. If the spacecraft’s one main engine failed to ignite, the astronauts would be stuck in lunar orbit forever. No spacecraft, manned or unmanned, had returned from such a mission.

The mission was successful. The US held onto its lead in space. After Apollo 8, the program progressed rapidly. In 1969, four Apollo missions launched, three of which went to the moon, including the first two landings.

NASA hit another setback in April 1970 with its first near-disaster in space. Apollo 13 threw a wrench into the well-oiled lunar-landing machine. A ruptured oxygen tank crippled the main spacecraft – the oxygen tanks were integral to the spacecraft’s power system. Within minutes of the incident, the lunar goal was replaced with the goal of returning the crew alive. (Pictured is Apollo 13’s damaged service module. 1970.)

Engineers worked around the clock to come up with creative and often untested solutions to the new mission objective. They developed ways to extend the capabilities of the LM to use it as a lifeboat. They tried completely untested fixes for problems no one had even thought of.

The problem was identified as a fluke in the spacecraft, not a design flaw like the one that claimed the lives of the Apollo 1 crew. As such, there was no need to redesign the spacecraft. Within 10 months, Apollo 14 flew to the Moon with the same components in its spacecraft.

The decisions to send Apollo 8 to the moon and fly Apollo 14 so soon after Apollo 13 are perhaps two of the most recognizable risks taken during the lunar program. Individual missions were not without glitches, often requiring engineering decisions that were entirely untested and dangerous. But it is these larger scale episodes that speak to NASA’s willingness to proceed in spite of the dangers.

By the early 1970s, with the lunar goal in the bag, NASA began to lose funding. The last three Apollo missions – 18, 19, and 20 – were cancelled. NASA embarked on its first orbital space station, Skylab; crews flew in leftover Apollo spacecraft to reach the space station in orbit. The program was short-lived with only three missions. Nevertheless, Skylab retained the spirit that had enabled NASA to take on risky missions. When the solar panels on the first unmanned Skylab failed to open, there was only one solution. NASA sent a crew up to fix it by hand. (Pictured, Skylab as seen from the Skylab 3 command module. 1973.)

The end of Skylab was due in large part to NASA’s upcoming new program that promised to take spaceflight in new directions – the Space Shuttle, which first launched in 1981.

The Space Shuttle ushered in a new era. There was no longer an overarching goal for NASA, nor was there an adversary to beat. There were also different people in charge. The tone of NASA had changed. Risk was still an inherent part of spaceflight, but NASA seemed more cautious with Apollo behind it.

NASA faced the risk of its new vehicle early on in the Shuttle program. In 1986, a faulty seal in one of the Space Shuttle Challenger’s solid rocket boosters led to an explosion shortly after launch. All seven crewmembers of the STS-51-L mission, the 25th Shuttle flight, perished. The resulting inquiry into the disaster set NASA back.

Manned shuttle flights didn’t resume for two years and eight months. STS-26 launched in September 1988. Interestingly, they didn’t rewrite any software or make any major design changes to the vehicle. The delay was nearly a year longer than that following the Apollo 1 fire, which had required significant redesigns. (Right, the explosion that claimed Challenger. 1986.)

A second Shuttle was lost in February 2003; the space shuttle Columbia broke up during reentry over Texas. A similarly long delay followed, with the next manned Shuttle launch coming nearly two and a half years later in July 2005.

NASA’s caution can also be seen in its unmanned planetary missions. The need to fix a stuck or broken Mars rover is a good example. This is, admittedly, a different situation. A crew can’t be sent up to Mars to fix a broken rover. The forty-minute communications delay makes real-time fixes from Earth impossible. Still, there is no human life at stake. A major problem on Mars could cause some men on Earth to lose their jobs, but no one would be stranded to die in lunar orbit.

Both MER rovers, Spirit and Opportunity, have experienced problems during their now seven-year stay on Mars (although Spirit has stopped communicating with Earth).

Spirit was the first to run into trouble. Within its first Martian fortnight, a software problem crippled the rover – it stopped communicating with Earth and its onboard computer seemed stuck in a constant reboot cycle. This strained the batteries, keeping the rover awake during the Martian night instead of ‘resting’ and recharging its batteries. With every Earth-day that passed, while engineers and programmers worked out the problem, the rover drained more power.

The team finally decided to force a reboot command that would hopefully prevent the whole rover software from crashing. It was the only option; if they kept waiting the rover would deplete its own power source soon enough. The reboot worked, and NASA gradually regained communication with, and control of, Spirit.

Opportunity ran into problems later in its life. One of the rover’s first major targets was Endurance Crater, a deep crater with exposed rock – exactly what the scientists behind the MER program were after. The majority wanted to proceed immediately into the crater; many were anxious to begin testing the exposed rock before the rover started losing power. The trouble with the crater, however, was its apparent size and steep walls. Images from the rover indicated that it would have to descend a significant slope to enter the crater. Even if it turned out that the rover could descend the steep walls, it could still get stuck. No one could be certain it would be able to get back out again. (Pictured, an Earth-bound rover tests Opportunity’s possible descent into Endurance Crater. 2004.)

At the root of the issue wasn’t the fate of the rover. It was what Principal Investigator Steve Squyres called “issues of perception and blame”. The fear was that if the rover got stuck, it would look like his team failed, even if the rover did return significant scientific data before finding a final resting place in the crater. No one wanted to be the one who made the call that got the rover stuck in a crater forever.

And so he shifted the responsibility and asked his higher-ups to do a formal assessment of the rover’s chances of surviving both the descent into Endurance and the climb back out. This way, if Opportunity did get stuck, everyone who could be mad would have been included in the decision to go in the first place. From a professional standpoint, there was no risk. No one was going to lose their job.

But they did lose time. Before doing anything on Mars, engineers simulated the rover’s path into the crater on Earth using concrete slabs held at varying angles by a forklift. One of the Earth-bound rovers tried to climb and descend the rocks at different angles in an attempt to figure out what would be the safest entrance into the crater. The tests proved the rover was as surefooted as a mountain goat.

The tests lasted for a month after Opportunity first arrived at Endurance. Once they had the go-ahead, the Opportunity team began a safe entrance into the crater. They drove a few metres in and back out. The next Martian day (commonly called a ‘sol’), they drove in a few more, and then back out again. In the end, the rover was just fine. After a little over six Earth-months in the crater, Opportunity emerged and went on its way. (Rover engineers prepare a mixture of sandy and powdery materials to simulate some difficult driving conditions on Mars. 2005.)

Spirit fell victim to another setback towards the end of its ‘life’ when its rear wheels got stuck in a sand dune. As with Opportunity’s entrance into Endurance Crater, NASA engineers worked out possible ways to free the rover on Earth. They put Earth-bound rovers through different manoeuvres in an attempt to free wheels stuck in sandboxes. Once again, no one was willing to just have a go with the rover itself. Nothing was certain to work, and no one wanted to be the one to make the call that killed the rover.

After eight months of fruitless testing on Earth, the team was beginning to run out of options and time. The Martian winter was fast approaching and they had to move Spirit to orient its solar panels northward – solar power was necessary to save the computer during the cold winter. Finally, engineers began playing around with different wheel movements on Mars, moves that had never been tested in the Earth-bound sandbox. Sure enough, the drivers were able to free the rover from its sand trap on their first try.

The problems continue with Mars. The satellites currently in orbit around Mars are slated to be the communications link with the next round of rovers sent to the planet. Their onboard computers, however, are much older than their new rover counterparts, and their software is equally out of date.

The latter problem can be fixed; a software update could improve the performance of the Odyssey orbital satellite. But there is a risk. If the satellite rejects the software or if the upgrade causes some glitch, the principal communications link between the surfaces of Mars and Earth could be severed. NASA isn’t taking chances; opting to stay with older, slower software is safer than risking an update.

Admittedly, space exploration today, be it manned or unmanned, is a different endeavour than it was in the past. Most notable is the lack of an adversary pushing NASA to find solutions quickly. NASA now has time to work out the problems, almost negating the need for risky decisions.

This post has scratched the surface of what I think is a really interesting question in NASA’s history. This brief survey of events is little more than a framework; it doesn’t include risks taken by the Soviet program during the space race era or any details of fixes made following the major disasters such as Apollo 13 or the loss of Challenger and Columbia. I anticipate that I will revisit this overall topic in future research.

Selected Sources/Suggested Reading

1. Jim Lovell and Jeffrey Kluger. Lost Moon: The Perilous Voyage of Apollo 13. New York, NY: Houghton Mifflin Co. 1994.

2. Chris Kraft. Flight: My Life in Mission Control. Penguin Putnam. 2002.

3. Gene Kranz. Failure Is Not an Option. Thorndike Press. 2000.

4. Walter Schirra and Richard Billings. Schirra’s Space. Boston: Quinlan. 1988.

5. David Scott and Alexei Leonov. Two Sides of the Moon. New York: Thomas Dunne Books. 2004.

6. Alan Shepard and Deke Slayton. Moonshot: Inside America’s Race to the Moon. Turner Publishing, Kansas City. 1994.

7. Donald K. Slayton. Deke!. Forge: New York. 1994.

8. Nancy Conrad and Howard Klausner. Rocketman: Astronaut Pete Conrad’s Incredible Ride to the Moon and Beyond. NAL Hardcover. 2005.

9. Steve Squyres. Roving Mars. Hyperion. 2006.

10. Andrew Mishkin. Sojourner: An Insider’s View of the Mars Pathfinder Mission. Berkley Trade. 2004.

Again, thanks to Phil Christensen, principal investigator of the 2001 Mars Odyssey Thermal Emission Imaging System (THEMIS) instrument and the Thermal Emission Spectrometer (TES) instrument on the Mars Global Surveyor.
